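The Kubernetes and Docker versions were chosen with reference to the Kubernetes CHANGELOG. Dockershim was deprecated in v1.20 and removed in v1.24, so this example uses Kubernetes v1.21.x, a release that still supports Docker natively through the built-in dockershim. From v1.24 onward, using Docker as the container runtime requires cri-dockerd, released by Mirantis, to interface with Kubernetes.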

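Since Kubernetes adopted the CRI standard, Docker is no longer the only choice of container runtime. There are plenty of articles online about fresh Kubernetes installs, and people have started pairing Kubernetes with a variety of container runtimes, so it is hard to say which choice is most suitable. My own view: as long as most developers are still using docker or docker-compose, Docker is the best fit for me for now.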

Lab environment

All hosts run CentOS 7.9 (x86_64).

Kubernetes cluster installation steps

Create a regular user on all machines

[root@host ~]# adduser vagrant && passwd vagrant

On all machines, set the hostname and add the cluster hosts to /etc/hosts

[root@host ~]# cat >> /etc/hosts << EOF

192.168.200.160 k8s-master

192.168.200.161 k8s-node01

192.168.200.162 k8s-node02

EOF
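Because `cat >>` appends every time it runs, repeating this step leaves duplicate entries in /etc/hosts. A minimal idempotent sketch, assuming bash and awk; the helper name `add_host_entry` is hypothetical, and the host names in the usage comments are the node names reported by `kubectl get nodes` later in this article:

```shell
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE only when NAME is not already mapped there,
# so re-running the setup does not create duplicate lines.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 when NAME already appears as a host-name field
  # (fields 2..NF; field 1 is the IP) on a non-comment line.
  if [ -f "$file" ] && awk -v n="$name" '
      /^[ \t]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on each machine (as root):
# add_host_entry /etc/hosts 192.168.200.160 k8s-master
# add_host_entry /etc/hosts 192.168.200.161 k8s-node01
# add_host_entry /etc/hosts 192.168.200.162 k8s-node02
```

Commented-out lines are ignored, so an old `# ... k8s-node02` line does not stop the real entry from being added.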

[root@host ~]# hostnamectl set-hostname k8s-xxxxxx    # replace with this machine's own name, e.g. k8s-master

Write and run the following two shell scripts on all machines:

< install-k8s-env.sh >

#!/bin/bash

# this script is used to create k8s cluster on CentOS7.9

# 2025-06-24 by tomyshen

yum install -y nano git wget epel-release

yum install -y jq

yum install -y nfs-utils

yum install -y yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum clean all && yum repolist

yum install -y docker-ce-20.10.9 docker-ce-cli-20.10.9 --nogpgcheck

# swap off

sed -i '/swap / s/^\(.*\)$/#\1/g' /etc/fstab

swapoff -a

# needed by K8S

modprobe br_netfilter

echo "br_netfilter" > /etc/modules-load.d/br_netfilter.conf

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

sysctl -p

# SSH Server configuration needed by K8S

sed -i 's/#AllowTcpForwarding/AllowTcpForwarding/g' /etc/ssh/sshd_config

systemctl reload sshd

# firewall off

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

# time/date configuration

timedatectl set-timezone Asia/Taipei

yum install -y chrony

systemctl start chronyd && systemctl enable chronyd

# start and enable docker service

systemctl start docker

systemctl enable docker

# add vagrant user to docker group for K8S

usermod -aG docker vagrant

< install-k8s-node.sh >

#!/bin/bash

# this script is used to create k8s cluster on CentOS7.9 minimal

# 2025-06-24 by tomyshen

# install k8s

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.21.14 kubeadm-1.21.14 kubectl-1.21.14 \
  --disableexcludes=kubernetes \
  --nogpgcheck

systemctl enable kubelet.service

Google's el7 Kubernetes yum repository is no longer available, so this example uses the Kubernetes repository provided by Alibaba Cloud (Aliyun).
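Both scripts are written to run once; running install-k8s-env.sh again appends the two sysctl lines to /etc/sysctl.conf a second time. A sketch of an idempotent alternative, assuming bash and awk (the helper `set_conf_kv` is hypothetical and not part of the original scripts): it rewrites an existing uncommented KEY line, or appends one if none exists.

```shell
# set_conf_kv FILE KEY VALUE  (hypothetical helper)
# Ensures FILE holds exactly one uncommented "KEY = VALUE" line.
set_conf_kv() {
  local file=$1 key=$2 value=$3 tmp
  touch "$file" || return 1
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    # Non-comment line containing "=": compare the trimmed text left of "=".
    !/^[ \t]*[#;]/ && index($0, "=") > 0 {
      name = substr($0, 1, index($0, "=") - 1)
      gsub(/[ \t]/, "", name)
      if (name == k) {
        if (!done) print k " = " v   # rewrite the first match
        done = 1
        next                         # drop any later duplicates
      }
    }
    { print }
    END { if (!done) print k " = " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the original file mode
  rm -f "$tmp"
}

# Possible replacement for the two "echo ... >> /etc/sysctl.conf" lines:
# set_conf_kv /etc/sysctl.conf net.bridge.bridge-nf-call-iptables 1
# set_conf_kv /etc/sysctl.conf net.ipv4.ip_forward 1
# sysctl -p
```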

On the master, initialize Kubernetes with kubeadm, setting --apiserver-advertise-address to the master's IP

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.200.160 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --kubernetes-version=v1.21.14 --cri-socket="/var/run/dockershim.sock"

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.200.160:6443 --token a8ljf1.39wgxyzavxa94lp0 \
    --discovery-token-ca-cert-hash sha256:e3600e527af5779bd8bb2c83bfcf4585508a8c661ddbafeec942b488765a54c9

Remember to run the three lines kubeadm asks for:

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

Install the general-purpose network plugin Flannel

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

On each of the other worker nodes, run the command printed above to join the cluster

[root@k8s-node0x ~]# kubeadm join 192.168.200.160:6443 --token a8ljf1.39wgxyzavxa94lp0 \
    --discovery-token-ca-cert-hash sha256:e3600e527af5779bd8bb2c83bfcf4585508a8c661ddbafeec942b488765a54c9

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "k8s-node01" could not be reached

[WARNING Hostname]: hostname "k8s-node01": lookup k8s-node01 on 168.95.1.1:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

On the master, check the status of each node in the cluster

[root@k8s-master ~]# kubectl get nodes

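NAME         STATUS   ROLES                  AGE     VERSION
k8s-master   Ready    control-plane,master   3m31s   v1.21.14
k8s-node01   Ready    <none>                 100s    v1.21.14
k8s-node02   Ready    <none>                 88s     v1.21.14

The token for joining the cluster is valid for only 24 hours. To add a node after it expires, generate a new token first, and recompute the CA certificate hash if you no longer have it.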
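kubeadm can also print a complete join command in one go with `kubeadm token create --print-join-command`. If you prefer to assemble it yourself, the sketch below wraps the same CA hash pipeline used in the manual steps. It assumes an RSA CA key (the kubeadm default) and `openssl` on the PATH; `ca_cert_hash` and `join_command` are hypothetical helper names.

```shell
# ca_cert_hash CA_CERT  (hypothetical helper)
# Prints the value that follows "sha256:" in --discovery-token-ca-cert-hash:
# the SHA-256 of the CA certificate's DER-encoded public key.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after the last space
}

# join_command API_ENDPOINT TOKEN CA_CERT  (hypothetical helper)
# Prints a kubeadm join command line for worker nodes.
join_command() {
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$1" "$2" "$(ca_cert_hash "$3")"
}

# Usage on the master:
# join_command 192.168.200.160:6443 "$(kubeadm token create)" /etc/kubernetes/pki/ca.crt
```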

[root@k8s-master ~]# kubeadm token create

03pcq8.ihvzmt2jo1gzwuei

[root@k8s-master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

c253e72b670c0766b9373f4632ce1d9ca38efd5297e01d6172ec7da62c0f1b22

To let nodes other than the master run kubectl locally, copy the kubeconfig directory to them:

[root@k8s-master ~]# scp -rp ${HOME}/.kube/ root@192.168.200.162:${HOME}/

Installing the Dashboard

Download and edit the YAML manifest, then install the Dashboard

[root@k8s-master k8s-yaml]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

[root@k8s-master k8s-yaml]# mv recommended.yaml kubernetes-dashboard.yaml

[root@k8s-master k8s-yaml]# nano kubernetes-dashboard.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32222
  selector:
    k8s-app: kubernetes-dashboard

Note the added type: NodePort and nodePort: 32222. Port 32222 is used to reach the Dashboard login page; you can change it, as long as it stays inside the cluster's NodePort range (30000-32767 by default).
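NodePort values are not arbitrary: kube-apiserver only accepts ports inside its `--service-node-port-range`, which defaults to 30000-32767. A small check to run before editing the manifest, assuming the default range is in effect (the helper name `is_valid_nodeport` is hypothetical):

```shell
# is_valid_nodeport PORT  (hypothetical helper)
# Returns 0 when PORT is an integer inside the default NodePort range
# 30000-32767 (kube-apiserver --service-node-port-range).
is_valid_nodeport() {
  local port=$1
  case $port in
    ''|*[!0-9]*) return 1 ;;   # empty or non-numeric
  esac
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

# Usage:
# is_valid_nodeport 32222 && echo "32222 can be used as nodePort"
```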

[root@k8s-master k8s-yaml]# kubectl apply -f kubernetes-dashboard.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-

Create an admin account for logging in to the Dashboard

[root@k8s-master k8s-yaml]# nano admin-sa.yml

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: admin
    namespace: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kubernetes-dashboard
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

[root@k8s-master k8s-yaml]# kubectl apply -f admin-sa.yml

Look up the token for logging in to the Kubernetes Dashboard

[root@k8s-master ~]# kubectl get secret `kubectl get secret -n kubernetes-dashboard | grep admin-token | awk '{print $1}'` -o jsonpath={.data.token} -n kubernetes-dashboard | base64 -d

eyJhbGchOiJSUzI1NiIsImtpZCI6InRNOZpubGFuVm5qbVp1eVBBTjZ5RkhFMzJFcmdzZmhCLTdkTkd1WkpyVHMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL8NlcnZpY2ZhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm2ldGVzLFRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmK0Lm5hbWUiOiJhZG1pbi10b2tlbi0yNTQ0YiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjlhNjk0NjhmLWRiZWYtNDdhOS05NzQ5LTUyNTc0YTU3Yjc4YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbiJ9.kLjiAqGxw26BzBFfs2wW9XgyRXm6StVJyF_jyd0WzTzuPEi0IZLdUpqGrNf52xJtJc_afUROaaGrLNr3oZkD0hU-UgeuExZ-UfrsBzouX7ubV8eFRG6QyCmktD_Dc56pJtboPFEpb2LpQeBthWsD2JD1u5VX7XRlcYEcS5MydNUO4nZ_mRIaLTAnt5a3a_ZVcyTyqc7ORCgmOMKzkpRxvCKQDqpQ1lnU9ubqg6zhZa9JTVtU_Rm4kU9pkTPuzWvscQ2bCksyDS4nR15lxxcL2SsiQ4R6BV45leBHw4FqGD1XkkZ-mFm0_kf0AlDqSMCAWuOg

From a browser (Firefox here) on the internal network, open https://<any node IP>:32222 and sign in with this token. The Dashboard serves HTTPS on this port, so plain http:// will not work.
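The printed token is a JWT, so its payload can be decoded to confirm which ServiceAccount it belongs to. A sketch assuming GNU coreutils `base64`; `jwt_payload` is a hypothetical helper, and it performs no signature verification:

```shell
# jwt_payload TOKEN  (hypothetical helper)
# Prints the decoded JSON payload (the middle, base64url-encoded segment)
# of a JWT. For inspection only: the signature is NOT verified.
jwt_payload() {
  local p
  # Take the second dot-separated segment and map base64url to base64.
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url omits "=" padding; restore it so base64 -d accepts the input.
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage (jq is installed by install-k8s-env.sh):
# jwt_payload "$TOKEN" | jq .
```

For a Dashboard admin token, the `sub` field should name the `admin` ServiceAccount in the `kubernetes-dashboard` namespace.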

The default token lifetime is 900 seconds, which gets in the way quite a bit, so here we change the deployment's token-ttl to 3600 seconds.

Alternatively, add the --token-ttl argument to the container args from the command line:

[root@k8s-master ~]# kubectl edit deployment kubernetes-dashboard -n kubernetes-dashboard

Installing metrics-server for resource monitoring

Following the compatibility matrix in the official metrics-server GitHub README, this cluster runs Kubernetes v1.21, so Metrics Server v0.7.x is installed.

Install metrics-server

[root@k8s-master ~]# kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.7.2/components.yaml

After more than 7 minutes, metrics-server still had not finished deploying...

[root@k8s-master ~]# kubectl get deployment -n kube-system

NAMESPACE     NAME             READY   UP-TO-DATE   AVAILABLE   AGE
kube-system   coredns          2/2     2            2           75m
kube-system   metrics-server   0/1     1            0           7m40s

Edit the arguments in the metrics-server Deployment to avoid kubelet certificate verification problems in this test environment

[root@k8s-master ~]# kubectl edit deployment metrics-server -n kube-system

Find the container args and add the line --kubelet-insecure-tls, then save and exit. The old pod is terminated on its own, and a new metrics-server pod is rolled out according to the updated definition.

  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --kubelet-insecure-tls
        - --metric-resolution=15s
        image: registry.k8s.io/metrics-server/metrics-server:v0.7.2

Check the metrics-server rollout: once kubectl top can report resource usage for the nodes in the cluster, metrics-server is working.

[root@k8s-master ~]# kubectl get deploy -n kube-system

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system coredns 2/2 2 2 78m

kube-system metrics-server 1/1 1 1 10m

[root@k8s-master ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 83m 4% 1101Mi 29%

k8s-node01 21m 0% 521Mi 4%

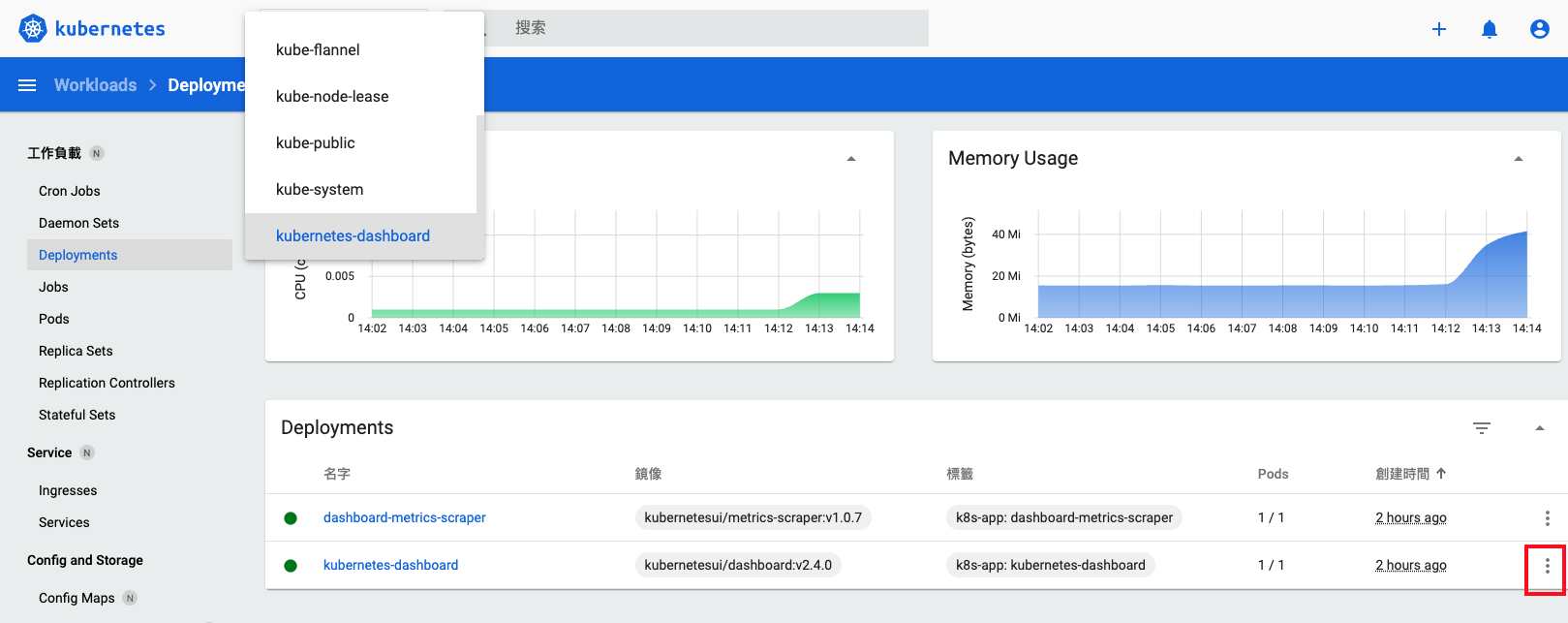

k8s-node02 23m 0% 468Mi 3%原本不存在的 Dashboard 圖形儀表板長出來囉~
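Besides the Dashboard graphs, the same numbers can be checked from a script. A minimal sketch that reads `kubectl top node` output (column layout as shown above, with MEMORY% as the last column) and lists nodes above a memory threshold; `nodes_over` is a hypothetical helper:

```shell
# nodes_over MEM_PCT_LIMIT  (hypothetical helper)
# Reads "kubectl top node" output on stdin and prints the names of nodes
# whose MEMORY% column exceeds the given integer percentage.
nodes_over() {
  awk -v limit="$1" '
    NR == 1 { next }            # skip the header row
    {
      mem = $NF                 # MEMORY% column, e.g. "29%"
      sub(/%$/, "", mem)
      if (mem + 0 > limit + 0) print $1
    }'
}

# Usage:
# kubectl top node | nodes_over 20
```

A node reported as `<unknown>` is treated as 0% and therefore never listed.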

Installing an ingress controller with helm and putting it to use

helm is the package manager for Kubernetes; think of it as what yum is to Red Hat Linux. Below we install helm for Kubernetes v1.21.14 (see the Helm version support policy); the matching release line is helm v3.9.x.
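Before extracting the tarball downloaded below, you may want to verify its checksum. A sketch assuming GNU `sha256sum`; `verify_sha256` is a hypothetical helper, and the `.sha256sum` file name in the usage comment is an assumption about how get.helm.sh publishes checksums next to each release tarball:

```shell
# verify_sha256 FILE EXPECTED_HEX  (hypothetical helper)
# Returns 0 if the SHA-256 digest of FILE equals EXPECTED_HEX (lowercase hex).
verify_sha256() {
  local file=$1 expected=$2 actual
  actual=$(sha256sum "$file" | awk '{ print $1 }')
  [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Usage (checksum URL pattern is an assumption):
# wget https://get.helm.sh/helm-v3.9.4-linux-amd64.tar.gz.sha256sum
# verify_sha256 helm-v3.9.4-linux-amd64.tar.gz \
#   "$(awk '{ print $1 }' helm-v3.9.4-linux-amd64.tar.gz.sha256sum)" && echo OK
```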

[root@k8s-master ~]# wget https://get.helm.sh/helm-v3.9.4-linux-amd64.tar.gz

[root@k8s-master ~]# tar zxvf helm-v3.9.4-linux-amd64.tar.gz

[root@k8s-master ~]# mv linux-amd64/helm /usr/local/bin/helm

[root@k8s-master ~]# rm -rf linux-amd64/

[root@k8s-master ~]# mkdir /root/helm-chart && cd /root/helm-chart

[root@k8s-master helm-chart]# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

[root@k8s-master helm-chart]# helm repo update

[root@k8s-master helm-chart]# helm repo list

NAME URL

ingress-nginx https://kubernetes.github.io/ingress-nginx

# Download the ingress chart package and edit its configuration values

[root@k8s-master helm-chart]# helm pull ingress-nginx/ingress-nginx --version 4.2.5

[root@k8s-master helm-chart]# tar zxvf ./ingress-nginx-4.2.5.tgz

[root@k8s-master helm-chart]# cd ingress-nginx

[root@k8s-master ingress-nginx]# vi ./values.yaml

controller:
  hostNetwork: true    # the ingress controller uses the node's host network for external access, normally ports 80 and 443
  dnsPolicy: ClusterFirstWithHostNet
  publishService:      # set to false in hostNetwork mode; ingress status is reported with the node IP addresses
    enabled: false
  kind: DaemonSet      # change Deployment to DaemonSet
  service:             # hostNetwork mode does not need a Service
    enabled: false
defaultBackend:
  enabled: true

[root@k8s-master ingress-nginx]# helm install ingress-nginx ingress-nginx/ingress-nginx --version 4.2.5 -f ./values.yaml -n ingress-nginx --create-namespace

Check the installation

[root@k8s-master ingress-nginx]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller-admission ClusterIP 10.100.122.119 <none> 443/TCP 29s

ingress-nginx-defaultbackend ClusterIP 10.96.25.31 <none> 80/TCP 29s

[root@k8s-master ingress-nginx]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-lzp4d 1/1 Running 0 47s

ingress-nginx-controller-v2s2h 1/1 Running 0 47s

ingress-nginx-defaultbackend-7454494f96-dthd9 1/1 Running 0 47s

[root@k8s-master ingress-nginx]# kubectl exec -it ingress-nginx-controller-lzp4d \
    -n ingress-nginx -- /nginx-ingress-controller --version

-------------------------------------------------------------------------------

NGINX Ingress controller

Release: v1.3.1

Build: 92534fa2ae799b502882c8684db13a25cde68155

Repository: https://github.com/kubernetes/ingress-nginx

nginx version: nginx/1.19.10

-------------------------------------------------------------------------------

Write a YAML file to test that the Ingress (ing) resource works

[root@k8s-master k8s-yaml]# nano ingress-test.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: dev
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pc-deployment
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
        - name: nginx
          image: nginx:1.28.0
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: service-clusterip
  namespace: dev
spec:
  selector:
    app: nginx-pod
  clusterIP:
  type: ClusterIP
  ports:
    - port: 80          # port exposed on the Service's cluster IP
      targetPort: 80    # port on the backing pods
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test.com
  namespace: dev
spec:
  ingressClassName: nginx
  rules:
    - host: test.tomy168.com
      http:
        paths:
          - pathType: Prefix
            backend:
              service:
                name: service-clusterip
                port:
                  number: 80
            path: /

As the output below shows, port 80, which the ingress controller occupies on both worker nodes in the cluster, serves the application

[root@k8s-master k8s-yaml]# kubectl apply -f ./ingress-test.yaml

[root@k8s-master k8s-yaml]# kubectl get ing --all-namespaces

NAMESPACE   NAME       CLASS   HOSTS              ADDRESS                           PORTS   AGE
dev         test.com   nginx   test.tomy168.com   192.168.200.161,192.168.200.162   80      59s

On any computer on the LAN, add a hosts-file entry mapping test.tomy168.com to 192.168.200.161, then open http://test.tomy168.com in a browser to verify the web application!
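To test the host rule without editing any hosts file, curl can pin the host name to a node IP with `--resolve`. A sketch assuming curl is installed; `ingress_http_status` is a hypothetical helper that prints the HTTP status code (curl prints 000 when no response arrives):

```shell
# ingress_http_status NODE_IP HOST [PORT]  (hypothetical helper)
# Requests http://HOST:PORT/ but connects to NODE_IP, so the request carries
# the Host header the Ingress rule matches on. Prints the HTTP status code.
ingress_http_status() {
  local ip=$1 host=$2 port=${3:-80}
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 \
    --resolve "$host:$port:$ip" "http://$host:$port/"
}

# Usage, checking both worker nodes:
# for ip in 192.168.200.161 192.168.200.162; do
#   echo "$ip -> $(ingress_http_status "$ip" test.tomy168.com)"
# done
```

A 200 from both nodes means the host rule and the hostNetwork DaemonSet are working; a 404 usually means the request reached the controller but the Host header matched no rule.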

This article draws on the following links:
